TRK

“Technically” Responsible: The essential, precarious workforce that powers A.I.

TRK, an essay and related project, is part of the Feminist Data Set project, which investigates the machine learning pipeline and its implications for society.

There is a belief that as digital infrastructure and technology intertwine with society, they reconfigure politics and culture in real time. Historian Louis Hyman describes this belief as a misconceived sense that society updates itself in step with technology[1]. Digital infrastructure and technology seize upon opportunities, especially exploitable ones, and it is critical for communities and developers to identify and reconstruct these platforms when new implementations demonstrate harm. While Artificial Intelligence (A.I.) presents a wide range of problematic issues within societies, this technology creates a specific kind of harm against the hidden, essential workers who train and ‘power’ it by labeling and categorizing data sets. Through obfuscation, atomization and the disenfranchisement of its workforce, the gig labor economy contracted to train computer algorithms operates within an exploitative model that perpetuates harm both to its workers and beyond. By analyzing these existing platforms, we can explore new interventions that proactively bring better outcomes to workers, and accountability and harm reduction to algorithms.

Employing low-cost workers to train machine-learning algorithms is one of the most common uses of task-based gig labor. These algorithms rely on computational statistics, using mathematics and large data sets to enable computer-assisted decisions or predictions. This encourages large-scale operations that build and refine vast quantities of data, providing the information that trains the algorithm and builds its “experience”. Businesses dedicated to these tasks exist all over the world, particularly (but not only) in special economic zones in China, Malaysia and India, among others. These operations are often daunting in scale, evoking factory floors or low-cost fashion manufacturing lines. For companies that lack the logistics to run their own centralised facilities, Mechanical Turk and similar companies offer comparable services, in which a home-bound workforce completes the same kinds of tasks at a competitive price. The power structure of this system is stacked against the workforce, creating vast inequality through economies of scale, atomisation between individual workers and depersonalisation through the interface. The second-order effects, deeply problematic outcomes facilitated by A.I. biases, offer a small window into the dynamics that affect this workforce. We argue that, alongside advocating for the ethical restructuring of gig work, focused interventions that improve worker incentives and platform governance could have a larger effect on the industries that rely on this work.

Ruijin Technology Company in Jiaxian, China. Photographed by Yan Cong for the New York Times

In 2001, senior members of Amazon’s early management team filed a US patent for a crowd-sourced labor market system that enabled clients to purchase large amounts of labor from across the globe to complete small, repetitive tasks. The system, Mechanical Turk, would launch four years later. At the time, Amazon’s public marketing highlighted Mechanical Turk as a cost-effective way to categorise data, optimise search algorithms, complete simple human-powered search actions and handle other sorting tasks that were repetitive yet impossible for computers to accomplish[2]. By 2018, according to company figures, the platform employed 500,000 workers, with wages estimated at an average of USD$2 per hour[3].

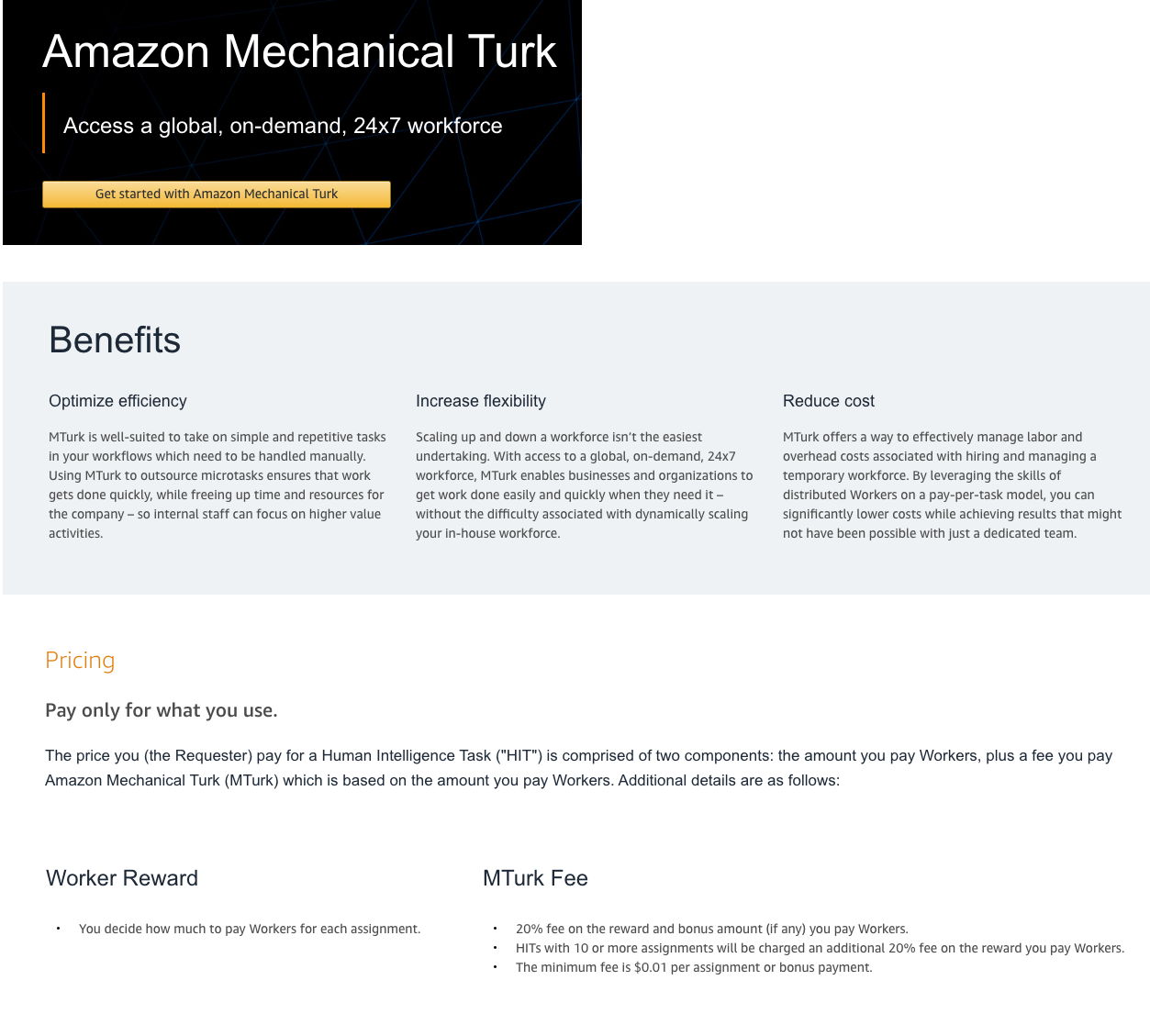

Screenshots from Mechanical Turk’s marketing homepage. Note the complete absence of any visual representation of what is arguably one of the largest crowd-sourced workforces in the world.

As investment in, and deployment of, machine learning, computer vision and A.I. grew, Mechanical Turk and its competitors found a compelling market. Some algorithmic systems rely on statistical mathematics and modelling to make decisions, and this requires an ability to recognise the variables and objects that go into a decision. Whether classifying images of cars, obstacles and pedestrians to help autonomous vehicles understand their surroundings, or transcribing audio to help correct and train speech recognition services, each algorithm relies on mass human intervention (a rudimentary copying of human intellect and pattern recognition) in order to function. The original Mechanical Turk, a fake robotic chess player built by Wolfgang von Kempelen in the 18th century, allowed a human player to puppeteer a caricature of an “oriental sorcerer” cum grand chess master by concealing themselves inside the machine itself. By 2020, the online crowd-sourced labor market controlled by Amazon’s service of the same name was well and truly the hidden human brain behind Silicon Valley’s facade of machine intelligence: an inter-generational sleight of hand.

To understand the consequences of nearly two decades of crowd-sourced labor, it is crucial to understand how widespread the use of these platforms is, for both workers and clients, and how these platforms interact with different societies. Decentralized labor of this kind includes tasks beyond image classification and text transcription[4] for A.I.: contracted content moderation on social media platforms[5]; interpreting, evaluating and acting on requests from users of smart digital assistants (such as Siri[6] and Alexa[7]); surveys and research; interface or systems testing; mass-scale communication[8]; archival classification and more. The sheer reach and scale of this labor is both unprecedented and completely invisible. Attempts to examine or audit these systems are hindered by corporate and vendor secrecy, worker compartmentalisation and a heavy reliance on cultural and class divides between workers, clients and researchers. Early investigations into crowd-sourced labor markets focused primarily on the demographics[9] of Turkers[10] or on pricing models to incentivise platform adoption[11]. Understanding the ethics, labor politics and biases inherent in how these platforms are designed relies upon whistleblowing, investigative journalism or research that traces emergent second-order[12] impacts back to the platform itself.

Crowdsourced labor systems are marketed to workers as a flexible, always-available opportunity. Unlike service or physical-labor gig platforms (such as Uber and Instacart), Mechanical Turk and its competitors rely heavily on a “Bring Your Own Device, Work From Home, Work Your Own Hours” narrative, and payment is often positioned as supplementary income. Yet the “white collar” nature of this labor means that workers often work alone, despite being members of a global workforce, and this decentralization makes them uniquely vulnerable to invisibility, isolation and exploitation.

With the workforce physically decentralized, the additional step of classifying crowd workers as contractors creates a significant power imbalance, allowing vendors to dictate entirely the terms under which workers operate. When changes are rolled out, they create conflicts that are sometimes mediated through publicity rather than through collective bargaining or other forms of negotiation. These conflicts can be financial, such as the 2019 pay dispute in which Rev.com silently cut the compensation of its “freelance” transcribers by over 30 percent[13]. Additionally, the legal status of gig workers means a complete lack of medical or psychological support for workers exposed to traumatic situations. Worker isolation and exposure to traumatic conditions have been revealed as urgent failings of welfare at Amazon[14], Facebook[15], Rev[16], Fiverr[17] and many more. The scale of corporate[18] indifference[19] to the health of gig workers was well and truly revealed during the COVID-19 pandemic in 2020, when corporate policies denied healthcare and protection to individuals working in extraordinarily precarious workplaces.

When low-cost, politically fractured labor is contracted to infrastructure projects, such as training systems that rely on machine learning or A.I., all of the political and social factors embedded in the gig labor platform contribute to the shape of the system being trained. The tension can be deeply political, as with Google’s deployment of gig workers to train automated drone targeting systems[20]. Anatomy of an A.I. System[21], a project by Kate Crawford and Vladan Joler, documents the enormous, planetary-scale impact of a single Amazon Alexa voice request. Alexa is an intensely complex product, and the inclusion of gig workers adds resilience and redundancy to the generally unreliable UX of voice-assisted interfaces. Although there are many problematic components within this system, the role of crowdsourced and contracted labor is seen as central to its successful operation. In many ways, the system depends on labor controlled through physical decentralization, alongside economic factors that help render these workers’ participation invisible, both to each other and to the wider world.

Mechanical Turk rarely acts as a visible advocate for its workers’ wellbeing. It provides little to no support and rarely investigates clients on behalf of its workforce. This is most obvious in the interface itself: there are no tools for workers to interact with other workers. Without external, collectively supported tooling, each worker is atomized and isolated from all others. When a worker reports an issue or complaint, it is at Amazon’s discretion to respond when and how it sees fit. With no publicly accessible guidance, and without economic or labor rights oversight, there is little incentive to change.

In light of these conditions, a partnership between workers and US-based researchers has brought proactive self-reporting measures to the platform. Through Turkopticon[22], workers can document instances of bad client behaviour or unreasonable job conditions, both to each other and to Amazon. Researcher Lilly Irani explains the project as “allow[ing] workers to publicize and evaluate their relationships with employers. As a common infrastructure, Turkopticon enables workers to engage one another in mutual aid. We conclude by discussing the potentials and challenges of sustaining activist technologies that intervene in large, existing socio-technical systems.”[23] Turkopticon’s importance is clear: by 2013, the service was handling 100,000 page views a month. The platform allows workers to support each other day to day, to document their concerns about clients by rating them and their tasks, and to create a kind of accountability and responsibility that the platform itself does not allow.

Beyond grassroots moderation systems lies the question of pay: this work is severely undervalued. The rates marketed on Mechanical Turk’s website appear reasonable, but tasks have no fixed length, which leaves little room for unexpected delays or badly estimated timeframes. Furthermore, the free-market approach to platform governance means that tasks are priced at the client’s discretion. Marketed income rates are merely suggested prices rather than an enforced price floor. Writing for The Atlantic, Alana Semuels details the systemic discrepancy in pricing: “A recent Mechanical Turk listing, for example, offered workers 80 cents to read a restaurant review and then answer a survey about their impressions of it; the time limit was 45 minutes. Another, which asked workers to fill out a 15-minute psychological questionnaire about what motivates people to do certain tasks, offered $1, but allowed that the job could take three hours.”[24]
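The gap between advertised time and actual pay in these listings can be made concrete with simple arithmetic: dividing a task's pay by the hours it takes gives its effective hourly rate. The Python sketch below is illustrative only; the function name `effective_hourly_rate` is hypothetical, and it assumes a worker spends exactly the stated time on each task (the 45-minute figure is a time limit, so the real duration may be shorter).

```python
def effective_hourly_rate(pay_usd: float, minutes: float) -> float:
    """Hypothetical helper: hourly rate implied by a task's pay and duration."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return pay_usd / (minutes / 60)


# The two listings quoted above, evaluated at their stated durations
# (assumption: the worker takes exactly this long).
listings = [
    ("restaurant review survey, full 45-minute limit", 0.80, 45),
    ("psychology questionnaire, advertised 15 minutes", 1.00, 15),
    ("psychology questionnaire, up to three hours", 1.00, 180),
]

for label, pay, minutes in listings:
    print(f"{label}: ${effective_hourly_rate(pay, minutes):.2f}/hour")
```

Run as written, this prints rates of $1.07, $4.00 and $0.33 per hour. Even the most generous reading of the questionnaire listing falls well short of the US federal minimum wage of $7.25 per hour.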

There is a growing understanding of the effects of low pay, workplace atomisation and the lack of medical and social support offered by these platforms, both on workers themselves and on the broader systems they contribute to. According to research conducted by the University of California in 2010[25], the majority of Mechanical Turk workers are US and Indian nationals, and more than half identify as female. What would an equitable, feminist Mechanical Turk look like? What would it take to move beyond this deeply exploitative and unethical model towards economic wellbeing, workplace cohesion and cooperative employment? Given the demographics of its workforce, is such a system even achievable without further perpetuating the digital colonialism that platforms facilitate?

To advocate for better working conditions, existing pricing structures[26] must be reformed. Given the international reach of such a service, and the assumptions made about the economic realities of these invisible workers[27], rethinking pricing transparency and accountability, and surfacing both during task creation, offers a promising starting point for dialogue between workers and clients. In TRK, an open source tool designed to price crowd-sourced gig labor tasks against a living wage, the approach to price negotiation is straightforward: the cost of tasks must take into account the minimum and living wage of workers within their geographical area. This is a simple intervention, but one that is absent from existing pricing models.
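The core of this pricing rule can be sketched in a few lines. The Python example below is a hypothetical illustration, not TRK's actual code: the helper name `minimum_task_price` is invented, the caller is assumed to supply the hourly wage for the worker's region (minimum or living wage) along with an honest estimate of minutes per task, and the function returns the smallest per-task price, rounded up to the cent, that meets that wage. `Decimal` is used to avoid floating-point rounding errors in currency.

```python
from decimal import Decimal, ROUND_CEILING

CENT = Decimal("0.01")


def minimum_task_price(hourly_wage: Decimal, minutes_per_task: Decimal) -> Decimal:
    """Hypothetical helper: the lowest per-task price that pays at least
    `hourly_wage` when one task takes `minutes_per_task` minutes."""
    if hourly_wage < 0:
        raise ValueError("hourly_wage cannot be negative")
    if minutes_per_task <= 0:
        raise ValueError("minutes_per_task must be positive")
    raw_price = hourly_wage * minutes_per_task / Decimal(60)
    # Round up, never down, so the worker is not shortchanged by a fraction of a cent.
    return raw_price.quantize(CENT, rounding=ROUND_CEILING)


# Example: the US federal minimum wage ($7.25/hour) applied to a task that
# takes the full 45 minutes, like the restaurant-review listing quoted earlier.
print(minimum_task_price(Decimal("7.25"), Decimal("45")))  # 5.44
```

Under these assumptions, the 45-minute listing would need to pay at least $5.44, not 80 cents, just to meet the federal minimum; a regional living-wage figure, which is typically higher, would raise that floor further.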

As an intervention, TRK’s Wage Calculator targets the key problem of valuing labor and task complexity. The core elements of task-based platform labor are the number of tasks, the length of each task and the price per task, all within the broader context of a worker’s full day. These elements are notably absent when the ‘worth’ of digital work is calculated. Location, which factors into the living wage, should not be dismissed either. The project is an attempt to build platform equity in the construction of data sets by highlighting labor and payment inequity in A.I. and machine learning systems.

In a series of interviews with users, gig labor clients were asked about the scale of pay when establishing tasks. In many cases, clients were completely unaware of (or underestimated) the systemic devaluation of labor, through both task complexity and time mis-estimation[28]. The Wage Calculator addresses this issue directly by bringing transparency to factors that are ignored during task pricing. Unlike existing platforms, the Wage Calculator frames proposed tasks within the context of an eight-hour workday. From there, the interface calculates the task set against geographical labor law to produce a living-wage estimate. This creates a price negotiation within the TRK interface, translating vague metrics into a concrete relationship between time, tasks and worker conditions.
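The eight-hour framing can be sketched as follows. This Python example is a hypothetical reconstruction of the idea described above, not TRK's implementation: the names `frame_in_workday` and `WorkdayFrame` are invented for illustration, the hourly wage for the worker's region is assumed to come from the caller, and the batch is assumed to be completed by a single worker doing tasks back to back without breaks.

```python
import math
from dataclasses import dataclass
from decimal import Decimal, ROUND_CEILING

WORKDAY_MINUTES = Decimal(8 * 60)  # the eight-hour day tasks are framed within
CENT = Decimal("0.01")


@dataclass
class WorkdayFrame:
    tasks_per_day: int            # whole tasks that fit in one eight-hour day
    days_for_batch: int           # days one worker needs to finish the batch
    day_earnings: Decimal         # pay for a full day of these tasks
    day_target: Decimal           # pay for a full day at the chosen hourly wage
    shortfall: Decimal            # gap between target and earnings (never negative)
    fair_price_per_task: Decimal  # per-task price that meets the hourly wage


def frame_in_workday(task_count: int, minutes_per_task: Decimal,
                     price_per_task: Decimal, hourly_wage: Decimal) -> WorkdayFrame:
    """Hypothetical sketch: restate a batch of tasks in terms of an eight-hour day."""
    if task_count <= 0:
        raise ValueError("task_count must be positive")
    if not 0 < minutes_per_task <= WORKDAY_MINUTES:
        raise ValueError("a task must take more than 0 and at most 480 minutes")
    tasks_per_day = int(WORKDAY_MINUTES // minutes_per_task)
    day_earnings = (tasks_per_day * price_per_task).quantize(CENT)
    day_target = (hourly_wage * WORKDAY_MINUTES / 60).quantize(CENT)
    fair_price = (hourly_wage * minutes_per_task / 60).quantize(CENT, rounding=ROUND_CEILING)
    return WorkdayFrame(
        tasks_per_day=tasks_per_day,
        days_for_batch=math.ceil(task_count / tasks_per_day),
        day_earnings=day_earnings,
        day_target=day_target,
        shortfall=max(Decimal(0), day_target - day_earnings).quantize(CENT),
        fair_price_per_task=fair_price,
    )


# Example: 1,000 three-minute tasks at 5 cents each, checked against the
# US federal minimum wage of $7.25/hour (a regional living wage is typically higher).
frame = frame_in_workday(1000, Decimal("3"), Decimal("0.05"), Decimal("7.25"))
print(f"{frame.tasks_per_day} tasks per day pay ${frame.day_earnings}, "
      f"against ${frame.day_target} at the chosen wage "
      f"(shortfall ${frame.shortfall}); fair price ${frame.fair_price_per_task}/task")
```

With these inputs, 160 tasks fit into one day and pay $8.00 against a $58.00 minimum-wage day, a shortfall of $50.00; the batch takes seven working days, and a price of $0.37 per task would meet the wage. Expressing the batch as a day of work, rather than as a per-task price, is what makes the gap visible to a client.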

There are many meaningful opportunities to intervene in this system, but building new tools is not enough to safeguard the wellbeing of workers; the entire structure of Mechanical Turk and its competitor platforms requires change. In workshops held in 2019 as part of Sinders’ Feminist Data Set project, participants from over 10 countries[29] were invited to speculate about the developments and changes required to foster greater representation of intersectional feminist ideals in A.I. Participants had a difficult time ‘improving’ Mechanical Turk within its existing, for-profit corporate structure. This research found that, whether a platform is housed in a larger conglomerate or structured as a startup, the economic and workplace imbalances suffered by workers are significantly amplified by for-profit business structures, which therefore cannot be intersectionally feminist.

The ubiquity of machine learning means that the methods used to build it demand scrutiny, including the working conditions of those who train and label data sets and data models. Given the necessity for platform reform, what alternative governance should replace these corporate structures? Perhaps this is a key opportunity for platform co-op models, in which localized governance gives workers greater wellbeing and control, and increased visibility and localization bring additional scrutiny to data labeling and training platforms. Beyond operating these platforms in the national interest (workshop participants suggested embedding them within local government, running them as part of a public library or establishing them as a large nonprofit), worker-moderated tools such as Turkopticon could be combined with a simpler, open-source infrastructure governed by effective labor politics[30]. For precarious workers, non-profit or public works structures by their nature invite additional regulation, support and transparency commitments. This can be seen in other speculative and real-world alternatives built on worker-owned governance models, particularly from outside the US: for example Shift[31], an autonomous Russian trucking network, or Libre Taxi[32], a community-focused Uber alternative. Implementing alternative governance in these machine training centers could create additional opportunities to proactively intervene in opaque ‘black-box’ algorithms and their unintended consequences.

Regardless of the opportunities for change, the ethics of low-paid gig work remain urgent and problematic. This holds from the platform down, where low-paid work is completed on hardware and networks built through environmentally and socially disastrous practices. With so much at stake, it is certainly not enough to focus only on the platform level. Our responses must foster labor rights, worker equity and socio-technical[33] participatory design. We must acknowledge those who work within and for these systems, and involve and design directly with these workers. Machine learning and A.I. will always be controversial, but framing equity across all the labor that goes into their design is something creators, technologists, artists and society can and should aim for: creating intersectional structures that foster ownership and cooperative determination for workers, thereby increasing the likelihood of fairer systems. Technology must reflect those who work within it, build it and use it, not just the handful of people and companies that own it.

Caroline Sinders & Cade Diehm

From Isolation, Spring 2020

trk.network

Footnotes

- [1] https://www.youtube.com/watch?v=5btapJs60WI

- [2] https://web.archive.org/web/20080419030047/http://requester.mturk.com:80/mturk/welcome?variant=requester

- [3] https://www.behind-the-enemy-lines.com/2018/01/how-many-mechanical-turk-workers-are.html

- [4] https://www.rev.com/freelancers/transcription

- [5] https://www.theverge.com/2019/2/25/18229714/cognizant-facebook-content-moderator-interviews-trauma-working-conditions-arizona

- [6] https://www.theguardian.com/technology/2019/jul/26/apple-contractors-regularly-hear-confidential-details-on-siri-recordings

- [7] https://www.cbsnews.com/news/amazon-workers-are-listening-to-what-you-tell-alexa/

- [8] https://theintercept.com/2020/01/16/pete-buttigieg-amazon-mechanical-turk-gig-workers/

- [9] http://crowdsourcing-class.org/readings/downloads/platform/demographics-of-mturk.pdf

- [10] A portmanteau of “Mechanical Turk” and “Worker”, a “Turker” is a colloquial term for an individual who trades their labor within a crowd-sourced task platform.

- [11] https://people.eecs.berkeley.edu/~bjoern/papers/singer-pricing-hcomp2011.pdf

- [12] A second-order effect is a consequence of a decision that is directly related to, but different from, the immediate result of that decision.

- [13] https://gizmodo.com/there-is-a-point-where-a-person-cant-do-anymore-1839808189

- [14] https://www.theatlantic.com/business/archive/2018/01/amazon-mechanical-turk/551192/

- [15] See [2]

- [16] https://onezero.medium.com/i-can-hear-the-suffering-rev-exposes-freelance-transcribers-to-violent-disturbing-content-4244758f832c

- [17] https://www.polygon.com/2017/2/14/14615142/what-is-fiverr-pewdiepie-anti-semitism

- [18] https://www.theverge.com/2020/4/10/21216172/amazon-coronavirus-protests-response-safety-jfk8-fired-covid-19

- [19] https://theintercept.com/2020/03/12/coronavirus-facebook-contractors/

- [20] https://theintercept.com/2019/02/04/google-ai-project-maven-figure-eight/

- [21] https://anatomyof.ai/

- [22] https://turkopticon.ucsd.edu

- [23] https://crowdsourcing-class.org/readings/downloads/ethics/turkopticon.pdf

- [24] https://www.theatlantic.com/business/archive/2018/01/amazon-mechanical-turk/551192/

- [25] https://pdfs.semanticscholar.org/2261/5775c75ba7a227bce389532a6b2cae7c4ab7.pdf

- [26] See [9]

- [27] For example, in workshops conducted as part of the Feminist Data Set project.

- [28] https://www.nytimes.com/interactive/2019/11/15/nyregion/amazon-mechanical-turk.html

- [29] Participants were from India, the United States, Turkey, Germany, the United Kingdom, Ireland, China, South Korea, Brazil, Mexico and other countries.

- [30] http://laborcenter.berkeley.edu/union-effect-in-california-1/

- [31] Shift. Dmitry Alferov, Liza Dorrer, Christian Lavista, Arthur Röing Baer. Strelka Institute, 2017.

- [32] https://libretaxi.org/

- [33] Matt Goerzen, Elizabeth Anne Watkins, Gabrielle Lim. “Entanglements and Exploits: Sociotechnical Security as an Analytic Framework”. 2019.